I wanted my algorithm to be similarly capable of changing the level of detail in the description.

Humans can easily give a more or less detailed description of the same data, and have little trouble deciding what details can and cannot be ignored. I wanted my algorithm to be able to think of very close points as being essentially the same in other words it should be robust to noise. However, describing the graph in this way would be extremely tedious. As such every fluctuation is recorded, no matter how small. The standard way that the computer represents this data is to hold the exact value of every point. I wanted my algorithm to be able to distinguish local and global features. If a person were to describe the data pictured above, Apple stock from 2010 until now, they would likely say that there is a general upward trend, with a few significant peaks. Here I will describe some of the design principles that informed my approach: Local vs. This is an extremely open-ended problem, and could be tackled in a number of ways. How exactly do you get a general description from specific data points? Principles This is much harder for computers specifically the idea of a "general" description is something that is not well defined. Humans can look at a plot of data and come up with a general description of what it's doing with very little effort.

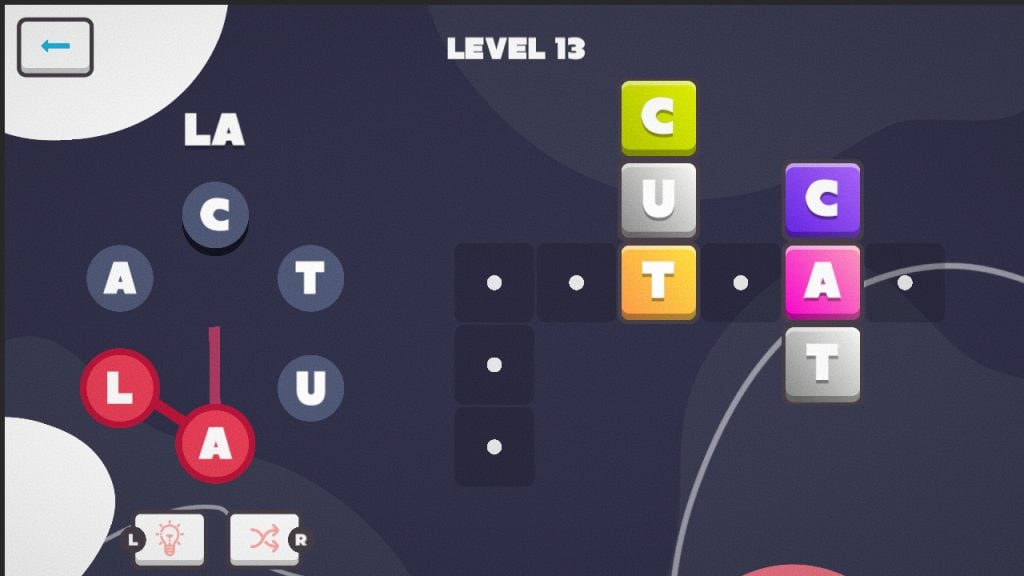

Wordify level 18 series#

The goal of this project was to come up with an algorithm to describe time series data in natural language.
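The post does not show how these principles are implemented, so the following is only a minimal Python sketch of one way they could be turned into code, not the author's method. It assumes evenly spaced samples and swaps in two standard techniques: an ordinary least-squares line for the global trend, and a "zigzag" threshold filter for local turning points. The function names (`trend_slope`, `zigzag_pivots`, `describe`) and the single `tolerance` parameter are hypothetical. `tolerance` does double duty: moves smaller than it are treated as noise, and raising it gives a coarser, less detailed description.

```python
# Illustrative sketch only: not the original Wordify implementation.
# Assumes evenly spaced samples and a positive noise threshold `tolerance`.


def trend_slope(values):
    """Ordinary least-squares slope of `values` against their index (needs >= 2 points)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def zigzag_pivots(values, tolerance):
    """Indices of turning points, ignoring reversals smaller than `tolerance`.

    A new turning point is only confirmed once the series has moved at least
    `tolerance` away from the most recent extreme, so small wiggles are
    absorbed as noise instead of becoming separate peaks and troughs.
    """
    pivots = [0]
    direction = 0  # +1 rising, -1 falling, 0 not decided yet
    extreme = 0  # index of the running high (rising) or running low (falling)
    for i in range(1, len(values)):
        if direction == 0:
            change = values[i] - values[0]
            if abs(change) >= tolerance:
                direction = 1 if change > 0 else -1
                extreme = i
        elif direction == 1:
            if values[i] > values[extreme]:
                extreme = i
            elif values[extreme] - values[i] >= tolerance:
                pivots.append(extreme)  # confirmed peak
                direction, extreme = -1, i
        else:
            if values[i] < values[extreme]:
                extreme = i
            elif values[i] - values[extreme] >= tolerance:
                pivots.append(extreme)  # confirmed trough
                direction, extreme = 1, i
    if direction != 0:
        pivots.append(extreme)  # end the last move at its extreme
    return pivots


def describe(values, tolerance):
    """One global sentence, then one sentence per local move of at least `tolerance`."""
    fitted_change = trend_slope(values) * (len(values) - 1)
    if abs(fitted_change) < tolerance:
        sentences = ["Overall the series is roughly flat."]
    elif fitted_change > 0:
        sentences = ["Overall there is a general upward trend."]
    else:
        sentences = ["Overall there is a general downward trend."]
    pivots = zigzag_pivots(values, tolerance)
    for start, end in zip(pivots, pivots[1:]):
        verb = "rises" if values[end] > values[start] else "falls"
        sentences.append(
            f"From t={start} to t={end} it {verb} from {values[start]} to {values[end]}."
        )
    return sentences


series = [10, 11, 10.5, 14, 13.8, 18, 15, 15.4, 22]
for sentence in describe(series, tolerance=1):
    print(sentence)
# Overall there is a general upward trend.
# From t=0 to t=5 it rises from 10 to 18.
# From t=5 to t=6 it falls from 18 to 15.
# From t=6 to t=8 it rises from 15 to 22.

for sentence in describe(series, tolerance=5):
    print(sentence)
# Overall there is a general upward trend.
# From t=0 to t=8 it rises from 10 to 22.
```

With `tolerance=1` the small wobbles (11 to 10.5, 15 to 15.4) are already treated as noise, but the drop from 18 to 15 is reported; with `tolerance=5` that drop is absorbed too and only the overall rise remains. A fixed absolute threshold is just the simplest choice for a sketch; a relative (percentage) threshold is a possible alternative for data whose scale changes over time.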
